For many years now, we have been hearing about the increasing value of data to organizations. Some have even referred to data as the “new oil of the digital economy.” Today, data is arguably the most valuable asset an organization possesses. When organizations give their staff instant access to that data, they improve their ability to make better and faster business decisions based on the insights derived from it.

“Data Estate” is a relatively new term in IT, and Microsoft has adopted it in its efforts to support customers’ digital transformation and modernization. For many years, data has been stored in silos designed to serve individual business groups or applications. Unlocking insights and driving business transformation with the full potential of data requires a modernized data infrastructure that breaks down those silos. Organizations that treat data as a strategic asset can take full advantage of the promise of AI and machine learning. Microsoft continues to lead by example through this strategic cultural shift.

Microsoft has developed an internal model known as the Enterprise Data Strategy. Other organizations can use this tried and tested model and customize it for their own needs. Closely examining Microsoft’s own Enterprise Data Strategy can therefore be beneficial for any organization looking to modernize its data estate.

The Enterprise Data Strategy was created with five goals in mind:

- To build a single enterprise destination for high-quality, secure, trusted data.

- To connect data from disparate silos, creating opportunities to leverage that data in ways not possible in a siloed approach.

- To power responsible data democratization across the organization.

- To drive efficiency gains in the processes used to access and use data.

- To meet or exceed compliance and regulatory requirements without compromising the organization’s ability to create exceptional products and customer experiences.

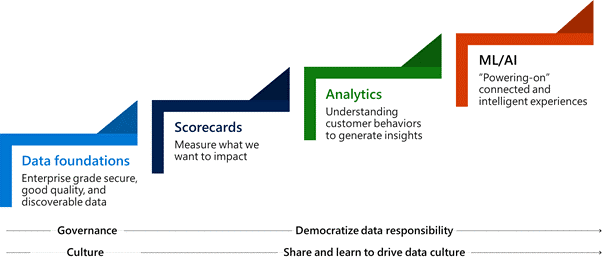

The value of data is directly proportional to the number of people who can connect to and utilize it in meaningful ways. To evolve from unit-level intelligence to a more all-inclusive and connected enterprise intelligence, investment in the elements of the Enterprise Data Strategy (Figure 1) should be a priority.

Figure 1. Building an infrastructure for more intelligent insights and experiences

Investments in these foundational elements should be iterative, and each element builds upon the last. The data foundation provides secure, high-quality, discoverable data. Scorecards measure the impact of that data, analytics extract insights from it, and machine learning (ML) and AI transform it into intelligent experiences. All of these elements are linked by governance services designed to foster the responsible democratization of access to and use of data.

Microsoft Enterprise Data Strategy

Microsoft’s own model can be examined and adapted for use in other organizations.

Building modern foundations for trusted and connected data

To create a foundation that enables secure, high-quality, connected enterprise data to be easily discovered, accessed, and used responsibly by teams and users across the enterprise, modernization of the data foundations should be focused on five core pillars:

- Trusted data services to ensure data quality, security, compliance, and governance

- A single source of truth where connected enterprise data is collected, shaped into trusted forms, secured, made accessible, and conformed to applicable governance controls

- Connected data products, including unified master data, data from disparate sources conformed to common enterprise data models, and entity hierarchies

- Modern systems and tools to build and operate data products with sufficient guardrails to prevent improper data proliferation to edge systems and applications

- A unified data catalog for democratized access to the data and data products that teams require to power their own digital transformation

Measuring what matters with metrics and scorecards

To measure the impact of digital transformation efforts, start by defining the metrics that matter, using scorecards that consist of:

- Standardized, governed metric definitions for consistent calculations and reporting

- Automated data pipelines for data collection and measurement

- Reporting capabilities that are generated and refreshed automatically, and that include dashboards with trends, dimensional pivots, and self-service capabilities
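
As a rough illustration of what a standardized, governed metric definition might look like in practice, the following minimal Python sketch keeps each metric's calculation in one registry so every report computes it the same way. The names here (`MetricDefinition`, `REGISTRY`, `build_scorecard`, and the `days_to_build` field) are hypothetical and are not part of Microsoft's implementation.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Row = Dict[str, float]


@dataclass(frozen=True)
class MetricDefinition:
    """A governed metric: one name, one owner, one calculation."""
    name: str
    owner: str
    unit: str
    compute: Callable[[List[Row]], float]


def avg_days_to_build(rows: List[Row]) -> float:
    # Mean of a hypothetical "days_to_build" field; 0.0 for empty input.
    if not rows:
        return 0.0
    return sum(r["days_to_build"] for r in rows) / len(rows)


# Single registry, so every scorecard uses the same calculation.
REGISTRY: Dict[str, MetricDefinition] = {}


def register(metric: MetricDefinition) -> None:
    # Refuse a second definition under the same name to avoid drift.
    if metric.name in REGISTRY:
        raise ValueError(f"metric already defined: {metric.name}")
    REGISTRY[metric.name] = metric


def build_scorecard(rows: List[Row]) -> Dict[str, float]:
    # Evaluate every registered metric against the same input rows.
    return {name: m.compute(rows) for name, m in REGISTRY.items()}


register(MetricDefinition(
    name="avg_days_to_build",
    owner="data-governance",
    unit="days",
    compute=avg_days_to_build,
))
```

An automated pipeline could call `build_scorecard` on each refresh. Rejecting duplicate names is one simple way to keep a single definition per metric.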

Generating actionable insights with analytics

Actionable insights are generated from metrics using analytics, usually in the form of dimensional pivots and correlation/causation insights. These insights uncover distinct data states and trends to spur timely action, so robust analytics capabilities include:

- Trusted and connected enterprise data that directly relate to metrics that matter (defined with scorecards)

- Self-service analytic tools that enable data analysts and domain experts to generate actionable insights by querying and/or visualizing data, creating analytics models, and exploring deeper data correlations

- Tools for data democratization so data analysts and domain experts can publish and share their insights for benefit elsewhere in the company

Converting actionable insights to intelligent experiences with machine learning and AI

With actionable insights created, the next step is turning those insights into intelligent experiences that improve products, increase customer engagement and satisfaction, and boost employee productivity and efficiency. Investments in machine learning and AI support those goals through predictive, prescriptive, and cognitive intelligence that bolsters products and internal systems. This includes:

- Infrastructure to enable data scientists to build and operate ML/AI models, with tools and services to cleanse and prep data when they need to integrate model-specific data with enterprise data

- DevOps services and tools ensuring that when data scientists build, test, deploy, and operate ML/AI models, they do so in a secure, compliant, and scalable way

- A repository of reusable ML/AI models and services that are available to non-data scientists for use in their own products and systems

Democratizing data responsibly with modern data governance

The scale of an organization’s digital transformation should extend to all corners of the organization, not just to data scientists. Data democratization is a driving force in this transformation, and such democratization comes with compliance challenges. Data breaches and compliance violations could not only damage Microsoft’s reputation as a trusted brand, but also create obstacles to achieving a responsible, data-driven culture. Accordingly, the approach to modern data governance should include:

- Forming and staffing a data governance team to define and operationalize modern data governance across the enterprise. (As an example, Microsoft uses a hub and spoke model, with the data governance team forming the hub and data stewards in each team publishing or using data to scale governance practices.)

- Governance processes that utilize either automation or human workflows (or some combination of the two), depending on the goals and context.

- Strong, scalable technological foundations to embed governance practices in data management, data quality management, data security, data access management, compliance, and governance process automation.

Growing and scaling the data community

Since democratized access to data is a foundational goal of the digital transformation, non-technical users and teams will require support and training to evolve their use of data. Foster a community within your organization to train teams and apply shared learnings. Create working groups for data topics, training sessions, consultation forums, and shared sources for this purpose. Such structural change requires strong leadership, buy-in, and momentum.

Implementing the Modern Data Foundations

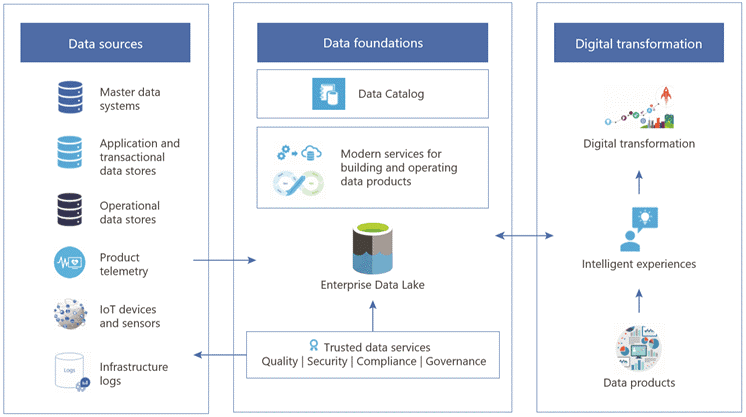

Microsoft products and technologies, especially those within the Azure platform, can simplify the implementation of an Enterprise Data Strategy by providing enterprise-ready data services.

Figure 2. The Modern Data Foundations

The Enterprise Data Lake

The Enterprise Data Lake (EDL), built on Azure Data Lake, Azure Data Factory, and Azure Synapse Analytics, addresses the challenge of siloed data by serving as the enterprise’s system of intelligence. There, data from across the enterprise can be ingested, conformed, standardized, connected, democratized, and served for enterprise-wide applications in analytics as well as ML/AI.

Trusted Data Services

Data is valuable only insofar as it is trusted. Data quality services include probabilistic, rules-based data quality scanning, as well as closed-loop data publisher workflows. Standardized schemas and shared data and analytics models conform data for shared entities, and data from multiple source systems, including sources that lack common connector attributes, is unified to generate golden records.
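
To make rules-based scanning and golden records more concrete, here is a minimal Python sketch. It is deliberately simplified: it matches records deterministically on a normalized email address, whereas the services described above also handle sources with no common attributes, which would need probabilistic matching. The rule names, field names, and functions are all hypothetical.

```python
from typing import Callable, Dict, List, Optional

Record = Dict[str, Optional[str]]
Rule = Callable[[Record], bool]

# Hypothetical quality rules; each returns True when the record passes.
RULES: Dict[str, Rule] = {
    "email_present": lambda r: bool(r.get("email")),
    "country_is_iso2": lambda r: r.get("country") is not None
    and len(r["country"]) == 2,
}


def scan(record: Record) -> List[str]:
    """Return the names of the rules this record fails."""
    return [name for name, rule in RULES.items() if not rule(record)]


def match_key(record: Record) -> str:
    # Assumption: a trimmed, lower-cased email identifies the same entity.
    return (record.get("email") or "").strip().lower()


def golden_records(records: List[Record]) -> Dict[str, Record]:
    """Merge records sharing a match key; the first non-empty value wins."""
    merged: Dict[str, Record] = {}
    for rec in records:
        key = match_key(rec)
        if not key:
            continue  # unmatched records would need a separate workflow
        target = merged.setdefault(key, {})
        for field_name, value in rec.items():
            if value and not target.get(field_name):
                target[field_name] = value
    return merged
```

In this sketch, a record that fails `scan` could be routed back to its publisher, which is the role the closed-loop publisher workflow plays in the description above.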

Security and management services are built using Azure Active Directory (AAD), Azure Entitlements Lifecycle Management (ELM), and Azure Key Vault. These services are secure by design, which is made possible by abstracting the complexities of creating, managing, and operating security and access management capabilities. That abstraction allows for auto-provisioning and for managing AAD security groups and memberships, Azure ELM access packages for data assets, and security keys and certificates for access management. Compliance is handled by automated controls, processes, and audit reporting for regulatory standards such as the General Data Protection Regulation (GDPR) and the Sarbanes-Oxley Act (SOX).

Strong governance is achieved by operationalizing these and related processes as automated workflows, while still allowing for human touchpoints when needed. Data access management, for example, can be configured to automatically approve data access requests, but can also be configured to require manual review as necessary.
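
The choice between automatic approval and manual review can be thought of as a small routing policy. The sketch below is illustrative only and does not use any Azure API. The sensitivity labels, the training flag, and the `route` function are assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    AUTO_APPROVED = "auto_approved"
    MANUAL_REVIEW = "manual_review"


@dataclass(frozen=True)
class AccessRequest:
    user: str
    dataset: str
    sensitivity: str  # hypothetical labels: "public", "internal", "restricted"
    has_completed_training: bool


# Assumption: only these sensitivity levels are eligible for auto-approval.
AUTO_APPROVE_LEVELS = {"public", "internal"}


def route(request: AccessRequest) -> Decision:
    """Auto-approve low-risk requests; send everything else to a human."""
    if (request.sensitivity in AUTO_APPROVE_LEVELS
            and request.has_completed_training):
        return Decision.AUTO_APPROVED
    return Decision.MANUAL_REVIEW
```

Defaulting to manual review whenever a condition is not met keeps the human touchpoint as the fallback, so a new or unrecognized sensitivity label is never approved by accident.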

The EDL also includes seamless, metadata-driven integrations with these services so business users can invoke and use them consistently.

Modern Services for Building and Operating Data Products

With Compute to Data and automated DevOps capabilities, organizations can accelerate the time it takes to build, test, deploy, and operate data products.

With the new Compute to Data paradigm in place, organizations can build data products without moving or copying data out of the EDL. Data scientists can use standard SQL and SQL-based interfaces to query data and build products using Azure Synapse Analytics. Azure Databricks enables the use of comprehensive programming languages and runtimes, so data scientists can also build advanced analytics and ML/AI data products. Both of these services integrate with the EDL and enable the development of data products within it, without the data needing to be copied and proliferated.

ML Operations services are built on Azure Machine Learning Services and Azure DevOps to automate and democratize DevOps capabilities for ML/AI products. As a result, data scientists can productize secure, compliant, and scalable ML/AI models as intelligent services themselves, without software engineering skills.

The Data Catalog

The Enterprise Data Catalog is the single destination for data consumers to find and gain access to the data and data products they need. The EDL Metadata service sends metadata published to the data lake to the catalog for discovery. Broader data sources—transactional data systems and master data, for example—are also registered in the catalog.
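
As a simplified mental model of the catalog's role, the following Python sketch shows metadata from different kinds of sources being registered in one place and then searched by consumers. It is an in-memory stand-in, not the Enterprise Data Catalog or any Azure service, and the `CatalogEntry` and `DataCatalog` names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class CatalogEntry:
    name: str
    source: str  # hypothetical labels, e.g. "data_lake" or "transactional"
    description: str
    tags: Tuple[str, ...] = ()


class DataCatalog:
    """In-memory stand-in for a single place to discover data assets."""

    def __init__(self) -> None:
        self._entries: Dict[str, CatalogEntry] = {}

    def register(self, entry: CatalogEntry) -> None:
        # Re-registering a name replaces its metadata with the latest.
        self._entries[entry.name] = entry

    def search(self, term: str) -> List[str]:
        # Case-insensitive match on name, description, or any tag.
        needle = term.lower()
        return sorted(
            e.name
            for e in self._entries.values()
            if needle in e.name.lower()
            or needle in e.description.lower()
            or any(needle in t.lower() for t in e.tags)
        )
```

A metadata service would call `register` whenever a dataset is published, while other systems could register their own entries through the same interface.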

Defining success

The ultimate goal of these initiatives is to facilitate the creation of intelligent data products, and to reduce the time it takes to build, measure, and evolve those products.

Microsoft generates, captures, possesses, and analyzes vast amounts of data. By building the Modern Data Foundations, Microsoft has democratized and consolidated access to that data throughout its organization.

Microsoft developed baseline metrics that other organizations can mirror to measure the progress of their own data estate modernization:

- Time to build connected data products

- Time to integrate connected data products into existing applications and services

Reduced cost is a byproduct of the greater digital transformation, but it is not necessarily the end goal. Rather than tackling spend in absolute terms, these initiatives optimize costs, tailoring spend to a business’s unique growth cycles. Post-transformation, spend is more efficient and proportional to growth.

Additionally, the elimination of multiple business unit data platforms reduces the security and governance vulnerabilities that arise as an organization strives to manage so many separate platforms. In the process of this digital transformation, organizations can reduce their financial and reputational risk simply by reducing the exposure created by having so many discrete systems to protect against a breach.

Conclusion

By closely examining Microsoft’s own implementation of its Enterprise Data Strategy, we have a tested model or blueprint for modernizing the data platform in our own organizations. By using the full range of Microsoft Azure offerings, organizations have enterprise-ready tools to assist with the otherwise overwhelming task of data platform modernization.

As an experienced Microsoft Gold Certified Partner with numerous Data and Cloud specialties, Planet Technologies can assist organizations in planning, designing, and implementing their data modernization and transformation, while ensuring that their teams can not only maintain the data foundations platform but also continue to refine it.

For more information, please contact [email protected].